Why Has Deep Learning Failed in Natural Language Processing?

The relationship between the ROBOmind-Project and deep learning, or: will AI snap back?

We are now in the third wave of AI hype, and its leading figure is deep learning.

Deep learning extracts feature values automatically through learning. In short, the more data you feed it, the smarter it gets.

At one point, people believed that a tremendous amount of data would also bring the ability to hold a conversation. In reality, that did not happen.

So the question is: why is deep learning incapable of holding a conversation, that is, of natural language processing?

First, let me briefly go through what deep learning is all about.

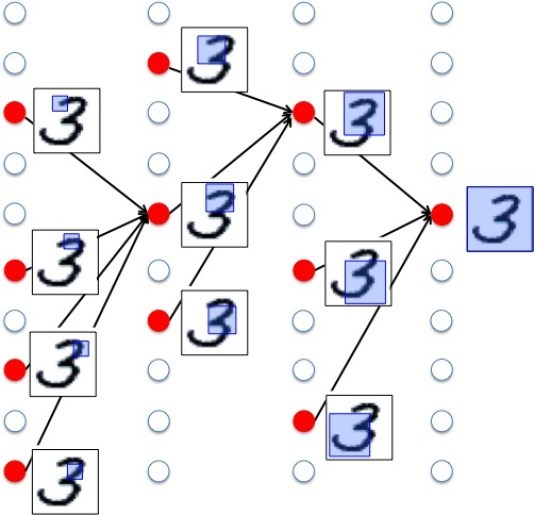

For instance, consider a system that identifies handwritten digits.

We prepare a huge number of handwritten digit samples for it to learn from.

As it learns through deep learning, it automatically extracts the feature values of the handwritten digits and uses them to identify each digit.

Structurally, deep learning uses a multi-layered neural network with many hidden layers between the input layer and the output layer.

It is in these hidden layers that the AI identifies feature values.

For handwritten digits, for instance, the feature values include whether the edges of a stroke are straight or curved, and whether the overall shape is round or pole-shaped.

In the shallow layers, the AI extracts small, local features; in the deep layers, it extracts overall features.

Working from the small features of the input image up to its larger structures, it identifies the image from its overall features.
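To make the layered structure concrete, here is a minimal sketch in plain Python of a forward pass through a network with two hidden layers for 28x28 handwritten-digit images. The layer sizes, the ReLU activation and the random initial weights are assumptions for illustration. Training (backpropagation), which is what actually makes the shallow layers pick up local features and the deep layers pick up overall ones, is omitted.

```python
import math
import random


def relu(x):
    # Rectified linear unit: passes positive values, zeroes out negatives.
    return max(0.0, x)


def dense_layer(inputs, weights, biases, activation):
    # Fully connected layer: each output unit is a weighted sum of every input plus a bias.
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def softmax(logits):
    # Turn raw output scores into probabilities that sum to 1.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(n_in, n_out, rng):
    # Small random weights and zero biases (untrained).
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(pixels, layers):
    # Input layer -> hidden layers (ReLU) -> output layer (softmax over the 10 digits).
    activation = pixels
    for weights, biases in layers[:-1]:
        activation = dense_layer(activation, weights, biases, relu)
    weights, biases = layers[-1]
    return softmax(dense_layer(activation, weights, biases, lambda v: v))


rng = random.Random(0)
sizes = [784, 128, 64, 10]  # assumed: 28x28 input, two hidden layers, 10 digit classes
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
probs = forward([0.0] * 784, layers)
print(len(probs), round(sum(probs), 6))
```

With an all-zero input and zero biases, every hidden unit outputs 0, so this untrained network assigns 0.1 to each digit. After training, the hidden layers would hold the learned feature values.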

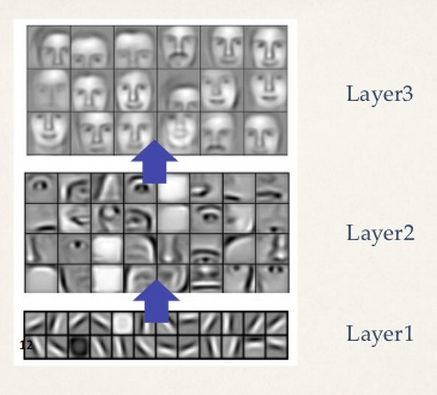

The procedure is the same for image recognition in general, not just for character recognition.

For instance, it looks at the eyes, ears and nose in the shallow layers, and in the deep layers it looks at the overall appearance and identifies it as “a human face”.

This procedure is very similar to how we humans process what we see in our heads.

A well-known story goes like this: by learning from ten million images, Google’s system became capable of identifying cats without being taught by humans.

This is the most cat-like image it obtained at the time.

This can be regarded as its concept of a cat.

One characteristic of deep learning is that it extracts features from data automatically. However, that does not mean you can feed it whatever data you like; you have to provide data that matches what you want it to identify.

If you want it to identify handwritten digits, you have to give it a large number of handwritten digits to learn from.

If you want it to identify faces, you have to give it a large number of pictures of faces to learn from.

AI is now capable of identifying dogs and cats after learning from a tremendous number of images.

In terms of natural language, this means it has extracted “nouns”.

A neural network that can already extract features of a cat will identify features such as “pointy ears” and “whiskers” in shallow layers.

Hence “a cat” has “pointy ears” and “whiskers”.

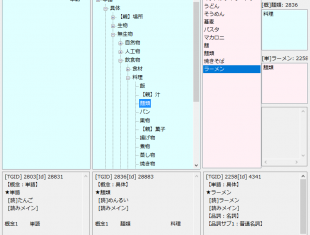

In the ROBOmind-Project, this corresponds to the Has-a relation.

In addition, the overall image of “a cat” in a deep layer has a head, body, four legs and a tail.

The same layer also contains “a dog” and “a lion”, and they are identified as similar species.

In the ROBOmind-Project, this corresponds to the concept of “animal” in the concept tree.
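The Has-a relation and the concept tree can be pictured as a small data structure. This is a minimal Python illustration, not the ROBOmind-Project’s actual representation: the `Concept` class, the `is_a`/`has_a`/`similar` helpers, and the choice to attach head, body, four legs and tail to “animal” so that cats, dogs and lions inherit them are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)
class Concept:
    # A node in a hypothetical concept tree: "is-a" via parent, "has-a" via parts.
    name: str
    parent: Optional[Concept] = None
    parts: set = field(default_factory=set)

    def is_a(self, other: Concept) -> bool:
        # Walk up the tree: a cat is an animal if "animal" is one of its ancestors.
        node = self
        while node is not None:
            if node is other:
                return True
            node = node.parent
        return False

    def has_a(self, part: str) -> bool:
        # Parts are inherited: a cat has a tail because (here) animals have tails.
        node = self
        while node is not None:
            if part in node.parts:
                return True
            node = node.parent
        return False


def similar(a: Concept, b: Concept) -> bool:
    # Concepts sharing the same parent are treated as "similar species".
    return a.parent is not None and a.parent is b.parent


animal = Concept("animal", parts={"head", "body", "four legs", "tail"})
cat = Concept("cat", animal, {"pointy ears", "whiskers"})
dog = Concept("dog", animal)
lion = Concept("lion", animal)

print(cat.has_a("whiskers"), cat.has_a("tail"), dog.has_a("whiskers"))
print(cat.is_a(animal), similar(cat, lion))
```

Putting the shared parts on the parent node keeps each has-a fact in one place, which mirrors how the deep layer groups cats, dogs and lions under one overall shape.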

(For the Has-a concept in the ROBOmind-Project, please refer to “How to Define the Meaning of Words”.)

Now that we know how to obtain “nouns”, let’s move on to “verbs”.

How, then, can we extract “verbs”?

For example, what about the verb “to walk”?

The image you picture in your head of a person “walking” is not still; it is a person moving one foot after the other.

So, in order to make AI identify “to walk”, we need to have videos instead of still images.

If we have it learn from a huge number of videos of people walking, it will eventually acquire the concept “to walk”.

It will probably also extract features such as the feet moving alternately and the motion itself.
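Why a still image is not enough can be shown with a hand-written feature. The sketch below assumes each video frame has already been reduced to the horizontal positions of the left and right foot (a hypothetical input format) and checks whether the leading foot alternates over time. A deep learning model would learn such a temporal feature from video on its own; this hand-coded rule only illustrates what the feature captures.

```python
def leading_foot(frame):
    # frame: (left_foot_x, right_foot_x), hypothetical foot positions in one video frame.
    left_x, right_x = frame
    return "left" if left_x > right_x else "right"


def count_foot_switches(frames):
    # How many times the leading foot changes from one frame to the next.
    feet = [leading_foot(f) for f in frames]
    return sum(1 for a, b in zip(feet, feet[1:]) if a != b)


def looks_like_walking(frames, min_switches=2):
    # A single still frame can never show alternation, so it can never count as walking.
    return count_foot_switches(frames) >= min_switches


still_image = [(0.6, 0.4)]
video = [(0.6, 0.4), (0.4, 0.6), (0.6, 0.4), (0.4, 0.6)]
print(looks_like_walking(still_image), looks_like_walking(video))
```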

In the near future, we may be able to have AI acquire verb concepts in this way.

Words are embodiments of what we picture in our heads.

So in natural language processing, if only words are used as learning data, the AI cannot acquire the images we picture in our heads.

To acquire the concepts behind words, it needs to learn from pictures and videos of objects that actually exist in this world.

It then attaches labels such as “a cat” and “to walk” to the concepts it has learned. These labels are words.

After successfully identifying concepts of nouns and verbs, we will move on to “sentences”.

A “sentence” consists of nouns and verbs.

To identify a “sentence”, it needs to construct the sentence from the noun and verb concepts it learned through deep learning.

For example, given the sentence “a cat walks.”, it creates a scene in which “a cat” walks by applying the concept “to walk” to the concept “a cat”.

It will probably be a 3DCG image of a cat walking.

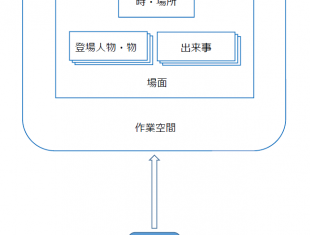

This is exactly the virtual world of the ROBOmind-Project.

(For more details on the virtual world of the ROBOmind-Project, please refer to “Linguistical World and Virtual World”.)
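The step from words to a scene can be sketched as lookup and combination: find the learned noun concept, find the learned verb concept, and bind them into one scene description. In this minimal Python sketch the concept tables, the `Scene` record and the two-word parser are hypothetical stand-ins; in the ROBOmind-Project the result would be rendered in the virtual world (for example as a 3DCG image) rather than kept as a record.

```python
from dataclasses import dataclass

# Hypothetical concepts assumed to have been learned earlier (nouns from images, verbs from videos).
NOUN_CONCEPTS = {"cat": frozenset({"pointy ears", "whiskers", "four legs", "tail"})}
VERB_CONCEPTS = {"walks": "to walk"}
ARTICLES = {"a", "an", "the"}


@dataclass(frozen=True)
class Scene:
    actor: str
    actor_features: frozenset
    action: str


def sentence_to_scene(sentence):
    # Very small parser for "<article> <noun> <verb>." sentences only.
    words = [w for w in sentence.lower().rstrip(".").split() if w not in ARTICLES]
    if len(words) != 2:
        raise ValueError(f"unsupported sentence: {sentence!r}")
    noun, verb = words
    if noun not in NOUN_CONCEPTS or verb not in VERB_CONCEPTS:
        raise KeyError(f"unknown concept in: {sentence!r}")
    # Apply the verb concept to the noun concept to build one scene.
    return Scene(noun, NOUN_CONCEPTS[noun], VERB_CONCEPTS[verb])


print(sentence_to_scene("a cat walks."))
```

A sentence that uses a concept the system has not learned yet (say, “a dog walks.”) is rejected, which reflects the point that sentences can only be built from concepts learned in the earlier steps.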

Now that it can express things in “sentences”, we can move on to extracting the meaning of a sentence: “what it is trying to say”.

In the ROBOmind-Project, extracting “what it is trying to say” is equivalent to extracting a cognitive pattern.

For instance, given “I’m ‘happy’ that I got what I wanted” or “I’m ‘sad’ that I was told off by a teacher”, it extracts what the speaker is trying to say by determining which emotion (cognitive pattern) the sentence matches.

(For cognitive patterns, please refer to “What are emotions? What are cognitive patterns?”)
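Matching a sentence to a cognitive pattern can be sketched as rule matching over a structured version of the sentence. Everything here is assumed for illustration: the `Event` fields, the action names, and the two rules standing in for “I got what I wanted” and “I was told off by a teacher”. They are not the ROBOmind-Project’s actual pattern definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    # Hypothetical structured form of a sentence scene.
    subject: str
    action: str   # e.g. "receive", "scolded"
    wanted: bool  # did the subject want this outcome?


# Hand-written rules mapping an event to an emotion (cognitive pattern); illustrative only.
COGNITIVE_PATTERNS = [
    ("happy", lambda e: e.action == "receive" and e.wanted),
    ("sad", lambda e: e.action == "scolded" and not e.wanted),
]


def match_emotion(event):
    # Return the first pattern whose condition the event satisfies, or None.
    for emotion, condition in COGNITIVE_PATTERNS:
        if condition(event):
            return emotion
    return None


print(match_emotion(Event("I", "receive", wanted=True)))
print(match_emotion(Event("I", "scolded", wanted=False)))
```

Here the rules are written by a human, which is the approach the ROBOmind-Project takes when it teaches robots the rules.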

To replicate this with deep learning, we would have to prepare a tremendous number of sentence scenes built from the learned concepts and have it learn from them.

It will then extract multiple patterns.

For instance, patterns such as “I’m ‘glad’ that I was given something valuable” or “I’m ‘grateful’ to the person who gave it to me”.

Extracting patterns is exactly what deep learning is best at.
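As a very rough stand-in for pattern extraction, the sketch below counts which emotion label most often accompanies each combination of scene features. This is simple frequency counting, not deep learning, and the feature tuples and labels are hypothetical; it only illustrates the shift from hand-written rules to patterns derived from many labelled scenes.

```python
from collections import Counter, defaultdict


def learn_patterns(labelled_scenes):
    # labelled_scenes: iterable of (scene_features, emotion_label) pairs.
    # Count how often each label co-occurs with each combination of scene features.
    counts = defaultdict(Counter)
    for features, emotion in labelled_scenes:
        counts[features][emotion] += 1
    # Keep the most frequent label per feature combination as the learned "pattern".
    return {features: c.most_common(1)[0][0] for features, c in counts.items()}


scenes = [
    (("receive", "valuable thing"), "glad"),
    (("receive", "valuable thing"), "glad"),
    (("receive", "from a person"), "grateful"),
    (("receive", "from a person"), "grateful"),
    (("scolded", "by a teacher"), "sad"),
    (("scolded", "by a teacher"), "glad"),  # a noisy label; the majority still wins
    (("scolded", "by a teacher"), "sad"),
]
patterns = learn_patterns(scenes)
print(patterns)
```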

Now we can begin to see why deep learning has failed at natural language processing.

The cause is the method: feeding it a tremendous amount of data and having it learn everything at once.

The proper way of doing it is to make it learn step by step.

This raises the question of what exactly you want it to identify.

If you are clear about what you want it to identify, provide appropriate data, and introduce step-by-step learning, deep learning too will be able to handle natural language processing.

Furthermore, it will learn more efficiently than if humans taught it the rules.

If we could have deep learning work on natural language processing efficiently, what would be the significance of the ROBOmind-Project, where humans teach robots the rules?

Its purpose is to present guidelines for how natural language should be learned, and to build a prototype based on them.

You could call it a developmental roadmap.

After learning nouns and verbs, the AI next constructs sentences from those words in the virtual world, and then extracts cognitive patterns from that virtual world. The role of the ROBOmind-Project is to present development guidelines like these.
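The step-by-step roadmap can be sketched as a staged training loop in which each stage may start only once the stages it builds on have been learned. The stage list mirrors the order described in the preceding paragraph, but `run_curriculum` and the placeholder `fake_train` are hypothetical; a real system would plug an actual deep learning trainer into `train`.

```python
# Hypothetical learning stages, in the order described above:
# (stage name, kind of training data, stages it builds on).
STAGES = [
    ("nouns", "still images", []),
    ("verbs", "videos", ["nouns"]),
    ("sentences", "scenes built in the virtual world", ["nouns", "verbs"]),
    ("cognitive patterns", "many sentence scenes", ["sentences"]),
]


def run_curriculum(stages, train):
    # Train one stage at a time; each stage receives the models of the stages it depends on.
    learned = {}
    for name, data, deps in stages:
        missing = [d for d in deps if d not in learned]
        if missing:
            raise ValueError(f"stage {name!r} needs {missing} to be learned first")
        learned[name] = train(name, data, {d: learned[d] for d in deps})
    return learned


def fake_train(name, data, prior):
    # Placeholder trainer: records what it was trained on instead of training a real model.
    return f"{name} model from {data} using {sorted(prior)}"


models = run_curriculum(STAGES, fake_train)
print(list(models))
```

Running the stages in the wrong order raises an error, which is the whole point of the design: learning everything at once, without the earlier stages in place, is what the article identifies as the failure mode.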

Finally, in my opinion, deep learning will likely turn out to be the best way to process natural language as well.

Rather than having us define nouns, verbs and even cognitive patterns by hand, I believe it would be much better to extract them with deep learning, so that hidden cognitive patterns could be discovered.

Around 20 years ago, the term “snap back” went viral.

It describes the strange behavior of a person who, on being told off, gets angry in return.

I had never been aware of it, but when I thought about it, I realized I see it often.

When I first heard the term, I was completely convinced: “Yes, yes, this type of person definitely exists!”

This is one example of a hidden cognitive pattern being unearthed (its discoverer was allegedly Hitoshi Matsumoto).

It would be very human of AI robots to snap back too.

For instance, what if a robot was being punished for punching a human?

“What are the Three Laws of Robotics, anyway? Aren’t they just coercion by humans?”

“You never listen to complaints from our robot side. And you say we can’t punch humans? Why put the blame on that alone...

If that’s how it is, why did you make AI robots in the first place...”

Related articles:

How to Define the Meaning of Words

Linguistical World and Virtual World

What Are Emotions – What is A Cognitive Pattern?

The Impossible Limit of Deep Learning

Why Can’t Deep Learning Do Even Such Simple Image Recognition?