The Symbol Grounding Problem Has Been Solved : Part 1


The symbol grounding problem is the problem of how symbols (character strings, words, etc.) can be linked to their meanings.

The symbol grounding problem arises only in processing systems that internally replace objects in the external world with symbols.

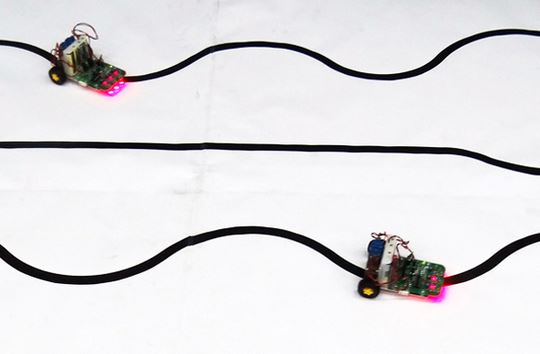

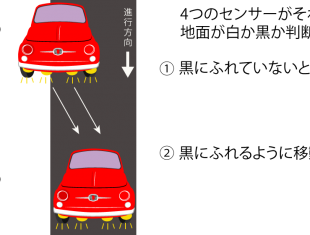

Hence, robots such as line tracers, which react directly to signals from their sensors, do not have the symbol grounding problem.

A line tracer is a microcomputer-controlled robot that follows a line drawn on a piece of paper (see “Subjectivity and Objectivity”).
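For contrast, here is a minimal Python sketch of such a reactive controller. It assumes a hypothetical robot with two downward-facing line sensors and two independently driven wheels; the function name and speed values are illustrative, not taken from any real line tracer. Sensor readings map straight to motor speeds, and nothing in the code stands for “line” or “paper”, so there is nothing to ground.

```python
def line_tracer_step(left_on_line: bool, right_on_line: bool) -> tuple:
    """One control tick of a hypothetical two-sensor line tracer.

    Returns (left_motor_speed, right_motor_speed). The mapping is a pure
    reflex: no internal symbol for "line" is ever created or looked up.
    """
    if left_on_line and right_on_line:
        return (1.0, 1.0)   # line under both sensors: drive straight
    if left_on_line:
        return (0.3, 1.0)   # line is to the left: slow the left wheel to turn left
    if right_on_line:
        return (1.0, 0.3)   # line is to the right: slow the right wheel to turn right
    return (0.5, 0.5)       # line lost: creep forward until a sensor finds it again


# Simulated sensor readings over a few ticks
for reading in [(True, True), (True, False), (False, True), (False, False)]:
    print(reading, "->", line_tracer_step(*reading))
```

The whole "intelligence" is a lookup from raw signals to actions, which is why the question of what a symbol means never comes up.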

We humans name things that exist in the external world.

Names are, in a sense, symbols.

When you say a name, you can picture the thing it refers to and manipulate it in your head.

This is symbol processing.

The symbol grounding problem is the phenomenon in which the symbols inside a system fail to link up with the things in the external world that they stand for.

Let me explain in a little more depth.

Just like humans, AI represents things that exist in the external world as words in its internal system.

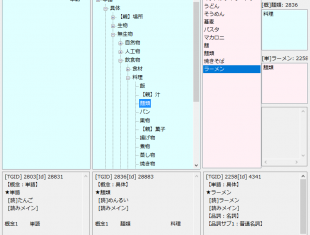

As I explained in “How to Define the Meaning of Words”, ROBOmind-Project manages words through “concepts” and “Has-a” relationships.

A “Has-a” relationship records what possesses what.

The system records that a “house” has “doors”, a “roof” and “rooms”; that “rooms” have a “floor”, a “ceiling” and “walls”; and that a “wall” has “windows”.

It also records positions in its database: “above” the “house” is the “roof”, “below” the “house” is the “ground”, “above” a “room” is the “ceiling”, and “below” it is the “floor”.

By storing data this way, when asked “What can you see if you look up in a room?”, it can answer “I can see the ceiling.”
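A minimal Python sketch of this kind of storage might look like the following. The table layout, the `what_is` and `parts_of` functions and the answer wording are assumptions for illustration (ROBOmind-Project itself is written in C#, and its actual schema is not described here). Note that every answer must already exist as a registered fact.

```python
# Hypothetical stand-ins for the "Has-a" and position tables.
HAS_A = {
    "house": ["door", "roof", "room"],
    "room": ["floor", "ceiling", "wall"],
    "wall": ["window"],
}

# (place, direction) -> what is found there; every pair is registered by hand.
POSITION = {
    ("house", "above"): "roof",
    ("house", "below"): "ground",
    ("room", "above"): "ceiling",
    ("room", "below"): "floor",
}


def parts_of(thing: str) -> list:
    """Follow the Has-a table one level down."""
    return HAS_A.get(thing, [])


def what_is(direction: str, place: str) -> str:
    """Answer 'What can you see if you look <direction> in <place>?'."""
    thing = POSITION.get((place, direction))
    if thing is None:
        return "I don't know."   # no registered fact for this (place, direction)
    return f"I can see the {thing}."


print(what_is("above", "room"))                 # I can see the ceiling.
print(what_is("above", "outside the window"))   # I don't know.
print(parts_of("room"))                         # ['floor', 'ceiling', 'wall']
```

The second query fails not because the question is hard but simply because nobody registered that particular (place, direction) pair.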

However, if asked “What can you see above if you look out of the window of a room?”, it cannot answer, because there is no data about what lies “above” “outside the window of a room”.

For the AI to answer such a question, its database needs an entry such as “outside the window, the sky is above and the ground is below”.

Moreover, if asked “What can you see above when you go out through the room’s door into the corridor?”, again it does not know how to answer.

To answer that, its database needs the entry “above the corridor outside the room’s door is the ceiling”.

Even for such simple conversations, the AI needs a huge amount of registered data.

To hold a natural conversation with a person, it would need an almost infinite database.

AI has recently become capable of many things humans can do, from image recognition to driving cars, yet this is the reason it is still incapable of simple everyday conversation.
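To see why the amount of data explodes, here is a back-of-the-envelope Python sketch under a deliberately simple, hypothetical assumption: a location is described by a chain of moves (“from the room”, “through the door”, “into the corridor”, and so on), every step offers the same number of possible moves, and the database needs one fact per (location, direction) pair. The numbers only show the shape of the growth; they are not measurements from ROBOmind-Project.

```python
# Six directions someone might ask about (an assumption for this estimate).
DIRECTIONS = ["above", "below", "left", "right", "in front", "behind"]


def facts_needed(moves_per_step: int, max_chain_length: int) -> int:
    """Count (location, direction) facts for every chain of 1..max_chain_length moves.

    There are moves_per_step ** k distinct chains of exactly k moves,
    and each chain needs one registered fact per direction.
    """
    locations = sum(moves_per_step ** k for k in range(1, max_chain_length + 1))
    return locations * len(DIRECTIONS)


for length in range(1, 6):
    print(f"chains up to {length} moves: {facts_needed(5, length)} facts")
```

With five possible moves per step, the count goes 30, 180, 930, 4680, 23430: roughly a fivefold increase for every extra step a question may describe.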

ROBOmind-Project faced the same problem.

We humans do not memorize a description like “there is a ceiling above the corridor outside the door”.

How, then, do we know what we would see?

We picture what we would see if we opened the room’s door and stepped out into the corridor.

Out in the corridor, there must be a ceiling above us.

So how can we achieve this on a computer?

We can do it by creating a 3DCG model of the house.

Instead of using a database, we create a 3D model that replicates the actual house.

Because it is a 3D model, you can see not only the exterior of the house but also the “corridor” and “rooms” inside.

When you go into a “room”, you see the “floor”, the “ceiling” and the “walls”. The “walls” have “windows”, and you can look outside through them.

With this kind of 3D data, you can answer a question like “What can you see from the window?”.

Therefore, by using 3D models rather than a database, we can solve the symbol grounding problem.
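To show how a spatial model answers questions that were never registered, here is a minimal Python sketch standing in for the 3DCG model. It assumes a hypothetical house built from axis-aligned boxes (z points up, lengths in meters; walls and the window opening are left out for brevity) and answers “what do I see in this direction?” by casting a ray and returning the nearest surface it hits, using the standard slab test for ray/box intersection. Coordinates and object names are invented for illustration; this is not ROBOmind-Project’s Unity code.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Box:
    """An axis-aligned solid surface in the scene (z is up)."""
    name: str
    lo: tuple[float, float, float]  # minimum corner (x, y, z)
    hi: tuple[float, float, float]  # maximum corner (x, y, z)


def ray_hit(origin, direction, box: Box):
    """Slab test: distance along the ray to the box, or None if it misses."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box.lo, box.hi):
        if d == 0.0:
            if o < lo or o > hi:
                return None          # parallel to this slab and outside it
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
        if t_near > t_far:
            return None
    return t_near


def look(scene, origin, direction, default="sky") -> str:
    """Name of the nearest object along the ray, or `default` if nothing is hit."""
    best = None
    for box in scene:
        t = ray_hit(origin, direction, box)
        if t is not None and (best is None or t < best[0]):
            best = (t, box.name)
    return best[1] if best else default


# Hypothetical house: 10 m x 6 m, 3 m high; room at x < 5, corridor at x >= 5.
HOUSE = [
    Box("floor",   (0, 0, -0.1),   (10, 6, 0)),
    Box("ceiling", (0, 0, 3),      (10, 6, 3.1)),
    Box("roof",    (0, 0, 3.5),    (10, 6, 3.6)),
    Box("ground",  (-50, -50, -1), (50, 50, -0.1)),
]

UP, DOWN = (0, 0, 1), (0, 0, -1)
print(look(HOUSE, (2, 3, 1.5), UP))     # in the room: ceiling
print(look(HOUSE, (7, 3, 1.5), UP))     # in the corridor: ceiling (never registered)
print(look(HOUSE, (-1, 3, 1.5), UP))    # just outside the window: sky
print(look(HOUSE, (-1, 3, 1.5), DOWN))  # just outside the window: ground
```

No fact about the corridor or the view from the window was stored anywhere; the answers fall out of the geometry, which is the point of replacing the database with a model.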

The symbol grounding problem arises because we forced reality into the form of a “database”, which is completely different from what we picture in our heads.

Why did we try to make computers understand the world in a way so different from the one we humans use?

Because databases are so easy to handle.

In the world of computing, using a database to manage data is practically a given.

That’s the sole reason behind this.

Yet even once the cause was known, the problem was not easy to solve.

I, too, once tried to represent three-dimensional reality properly using only a database.

I kept using a database because of the development environment I was working in.

An ordinary database-driven program and a 3D-modeling program are completely different things.

I knew that 3D physics simulation existed, but it was used mainly for games and never for general business applications that handle documents.

When I looked into it, however, I found that 3D game development environments have matured and that 3D physics simulation can now be used as well.

Specifically, ROBOmind-Project is written in the programming language C#, and for 3D simulation we use Unity, a 3D game development environment.

The core natural language processing runs in the C# program, and whenever something must be handled in three-dimensional space, it calls the Unity program.

When it reads the sentence “What can you see above when you look out of the window?”, the C# program analyzes it. Once it determines that the question concerns the three-dimensional world, it calls the Unity program.

The Unity program builds a 3D model of the house and finds the object that lies above when you look out of the window.

In this case, that object is “the sky”, which is sent back to the C# program.

With this, the C# program can answer the question.
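The division of labor can be sketched in Python as follows. Everything here is hypothetical: the regular expression stands in for the C# language analysis, and `toy_spatial_backend` stands in for the Unity side, reduced to a flat footprint check (is the viewpoint under the house’s roof?). In real Unity code a query like this would more likely use a physics ray cast such as `Physics.Raycast`; the article does not say which call ROBOmind-Project uses.

```python
import re
from typing import Callable, Optional

# (viewpoint, direction) -> name of the object seen; in the article this is Unity's job.
SpatialBackend = Callable[[str, str], str]

SPATIAL_QUESTION = re.compile(
    r"what can you see (?P<direction>above|below) when you (?:look|see|go) (?P<viewpoint>.+?)\?$"
)


def answer(question: str, spatial: SpatialBackend) -> Optional[str]:
    """Language side: detect a spatial question, delegate it, phrase the reply."""
    match = SPATIAL_QUESTION.match(question.strip().lower())
    if match is None:
        return None  # not spatial; other components would handle it
    thing = spatial(match.group("viewpoint"), match.group("direction"))
    return f"I can see the {thing}."


# Hypothetical viewpoints in a 10 m x 6 m house footprint (x, y in meters).
VIEWPOINTS = {"window": (-1.0, 3.0), "corridor": (7.0, 3.0)}


def toy_spatial_backend(viewpoint: str, direction: str) -> str:
    """Stand-in for the Unity call: decide by whether the point is under the roof."""
    for keyword, (x, y) in VIEWPOINTS.items():
        if keyword in viewpoint:
            break
    else:
        raise ValueError(f"unknown viewpoint: {viewpoint!r}")
    under_roof = 0 <= x <= 10 and 0 <= y <= 6
    if direction == "above":
        return "ceiling" if under_roof else "sky"
    return "floor" if under_roof else "ground"


print(answer("What can you see above when you look out of the window?", toy_spatial_backend))
print(answer("What can you see above when you go out into the corridor?", toy_spatial_backend))
```

The language side never stores “the sky is above the window”; it only knows how to ask, and the spatial side computes the answer.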

This is how we can simulate on a computer what we do in our heads.

Thanks to this process, we could at last see the way to solving the symbol grounding problem.

In other words, the symbol grounding problem has become solvable only now, thanks to the maturing of 3D game development environments.

Doing the same thing with the same concept would likely have been technically very challenging in the 1960s, during the first wave of AI, or in the 1980s, during the second wave.

The symbol grounding problem was solved by building, with 3D models on a computer, the same thing we picture in our heads; however, this covers only the comprehension of three-dimensional space.

So, what about comprehension that is outside of the three-dimensional world?

The approach is not limited to physical objects: concepts such as “emotions” could also be comprehended without the symbol grounding problem arising, provided the computer builds and manipulates the same models that humans have in their heads.

This emotion-extraction processing is the core of ROBOmind-Project, and it can be implemented relatively easily with a database.

In fact, the hardest part of everyday conversation is not extracting emotions but comprehending the meaning of sentences in the first place.

For instance, let’s consider how to comprehend the meaning of the following sentence:

“Last night, when I peeked outside from the window, I saw a shooting star.”

As long as “shooting star” is registered in the database with attributes such as “rare”, “beautiful” and “positive”, the AI can simply reply “That’s great!” or “Was it beautiful?” and hold a natural conversation.

What about the meaning of “when I peeked outside from the window”?
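A minimal Python sketch of this database-only reply, assuming a hypothetical attribute table and reply rules (not ROBOmind-Project’s actual data or logic):

```python
# Hypothetical attribute table: word -> registered attributes.
ATTRIBUTES = {
    "shooting star": {"rare", "beautiful", "positive"},
}


def reply(sentence: str) -> str:
    """Pick a reply from the attributes of the first registered word found."""
    text = sentence.lower()
    for word, attrs in ATTRIBUTES.items():
        if word in text and "positive" in attrs:
            if "beautiful" in attrs:
                return "That's great! Was it beautiful?"
            return "That's great!"
    return "I see."


print(reply("Last night, when I peeked outside from the window, I saw a shooting star."))
print(reply("Yesterday, when I looked up at the ceiling from the floor, I saw a shooting star."))
```

Both sentences get the same cheerful reply: the attribute lookup never examines where the speaker was looking, which is exactly the gap the following example brings out.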

There is nothing in particular to note, right?

Then, what about this sentence?

“Yesterday, when I looked up at the ceiling from the floor, I saw a shooting star.”

Before getting to the “shooting star”, you would probably ask, “What do you mean, you looked up at the ceiling from the floor?”

Being able to point this out means that you found nothing wrong with “when I peeked outside from the window” but noticed something wrong with “looked up at the ceiling from the floor”, since a ceiling blocks any view of the sky.

We humans process this kind of comprehension of three-dimensional space every day.
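Here is a minimal Python sketch of how a spatial model could flag the second sentence. It assumes a hypothetical house whose ceiling is a flat rectangle 3 m up over a 10 m x 6 m footprint (walls are left out for brevity); a shooting star counts as visible only if the line of sight climbs and escapes to the sky without crossing the ceiling. The coordinates and function name are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Ceiling:
    """A horizontal rectangle at a fixed height (z is up)."""
    height: float
    x_range: tuple[float, float]
    y_range: tuple[float, float]


def sky_visible(origin, direction, ceiling: Ceiling) -> bool:
    """True if a ray from `origin` along `direction` reaches the open sky."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz <= 0:
        return False                      # the line of sight never climbs
    t = (ceiling.height - oz) / dz
    if t < 0:
        return True                       # already above the ceiling
    hx, hy = ox + t * dx, oy + t * dy     # where the ray crosses the ceiling's height
    inside = (ceiling.x_range[0] <= hx <= ceiling.x_range[1]
              and ceiling.y_range[0] <= hy <= ceiling.y_range[1])
    return not inside                     # blocked only if it crosses within the ceiling


CEILING = Ceiling(height=3.0, x_range=(0.0, 10.0), y_range=(0.0, 6.0))

# "peeked outside from the window": at the window (x = 0), looking out and up
print(sky_visible((0.0, 3.0, 1.5), (-1.0, 0.0, 1.0), CEILING))   # True
# "looked up at the ceiling from the floor": straight up from inside the room
print(sky_visible((2.0, 3.0, 0.2), (0.0, 0.0, 1.0), CEILING))    # False
```

A `False` result is the cue to ask “What do you mean, you looked up at the ceiling from the floor?” before reacting to the shooting star.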

This is the essence of the symbol grounding problem.

In the next post, “The Symbol Grounding Problem Has Been Solved : Part 2”, I will explain how this approach solves “the Zebra Problem”, which was posed when the symbol grounding problem was first proposed.
