The Symbol Grounding Problem Has Been Solved : Part 2


This post is a continuation of the previous post, “The Symbol Grounding Problem Has Been Solved : Part 1”.

This post first uses the original symbol grounding problem as an example, and then explains how it relates to deep learning, the hype around Smart speakers, and the hypothesis of consciousness’ virtual world.

The symbol grounding problem was coined by Stevan Harnad in 1990.

He illustrates the problem with the example of zebras.

A “zebra” can be defined as “a type of horse with stripes”. This is a definition of “zebra” in words (symbols).

Even someone who has never seen a zebra will recognize one the first time they see it: “That’s a horse with stripes, just like people told me.”

So if we taught an AI this definition, would it recognize a zebra as a “zebra” the first time it encountered one?

For an AI, the explanation “a type of horse with stripes” is simply a combination of words (symbols).

The AI cannot connect this combination of symbols to zebras, the animals that exist in the real world.

This is where the symbol grounding problem arises.

The languages we speak (natural languages) are composed of symbols, so as long as the symbol grounding problem remains unsolved, no AI will be able to hold a natural conversation with humans.

Now let us look at how ROBOmind-Project goes about solving the symbol grounding problem.

In ROBOmind-Project, we first teach the AI that “zebras are a type of horse with stripes”. At this stage, the meaning of the word is defined only by symbols.

As explained in the previous post, in ROBOmind-Project the AI memorizes real-world things as 3DCG (3D computer graphics).

So it memorizes things like horses, elephants, and houses in 3DCG, and these models make up its knowledge.

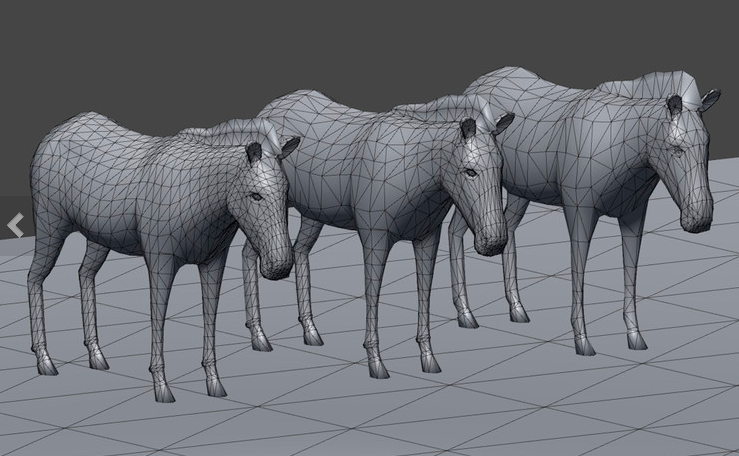

The picture below shows zebras drawn in 3DCG.

In 3DCG, a three-dimensional shape is expressed as a combination of many polygons, as in the picture below.

A 3DCG model is complete once an image is applied to the surface of the 3D model. These images are called textures, and applying textures to a surface is called texture mapping.

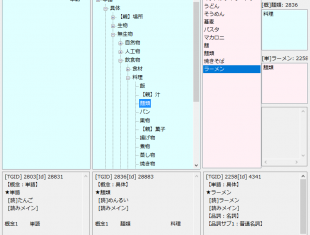
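To make texture mapping concrete, here is a minimal sketch, not ROBOmind-Project’s own code. It assumes a mesh stored as vertex positions, triangular faces, and per-vertex UV coordinates in the range 0 to 1, and looks up each vertex’s color in a texture image with nearest-neighbor sampling. The `Mesh` class, `sample_texture`, and the tiny striped texture are hypothetical; real renderers interpolate UVs across each triangle per pixel rather than coloring only the vertices.

```python
from dataclasses import dataclass

RGB = tuple[int, int, int]
Texture = list[list[RGB]]  # indexed as texture[row][col]


@dataclass
class Mesh:
    """A minimal polygon mesh (hypothetical layout): positions, triangles, per-vertex UVs."""
    vertices: list[tuple[float, float, float]]  # (x, y, z)
    faces: list[tuple[int, int, int]]           # each triangle as three vertex indices
    uvs: list[tuple[float, float]]              # (u, v) in [0, 1], one per vertex


def sample_texture(texture: Texture, u: float, v: float) -> RGB:
    """Nearest-neighbor lookup: map (u, v) in [0, 1] to the closest texel."""
    height, width = len(texture), len(texture[0])
    col = min(int(u * width), width - 1)    # clamp u == 1.0 to the last column
    row = min(int(v * height), height - 1)  # clamp v == 1.0 to the last row
    return texture[row][col]


def vertex_colors(mesh: Mesh, texture: Texture) -> list[RGB]:
    """Simplified texture mapping: give each vertex the texel at its UV coordinate."""
    return [sample_texture(texture, u, v) for u, v in mesh.uvs]


BLACK, WHITE = (0, 0, 0), (255, 255, 255)
# A 4x4 texture with vertical stripes: even columns black, odd columns white.
striped = [[BLACK if col % 2 == 0 else WHITE for col in range(4)] for _ in range(4)]

# One square face split into two triangles, mapped onto part of the texture.
quad = Mesh(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)],
    faces=[(0, 1, 2), (0, 2, 3)],
    uvs=[(0.0, 0.0), (0.3, 0.0), (0.3, 1.0), (0.0, 1.0)],
)
print(vertex_colors(quad, striped))  # -> [(0, 0, 0), (255, 255, 255), (255, 255, 255), (0, 0, 0)]
```

The same texture could be wrapped onto any mesh; only the UV coordinates decide which part of the image lands where.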

In ROBOmind-Project, as explained in “How to Define the Meaning of Words”, we organize words by concept, so “zebra” belongs under the concept “horse”.

In addition, because horses exist in the three-dimensional world, the concept “horse” has its own 3D model stored as three-dimensional data.

Now let us look at how the AI comes to identify a real zebra as a “zebra”.

First, it takes a picture of a zebra in the real world and generates a 3D model from it.

Next, it compares the shape of this 3D model with the 3D models stored in its system as knowledge. If the shape matches the 3D model of “horse”, the AI can identify the object in front of it as a “horse”.
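The post does not say how two 3D shapes are compared. As a minimal sketch, assume each 3D model is reduced to a point cloud that is already normalized for position and scale, and compare point clouds with the Chamfer distance, a standard point-cloud similarity measure swapped in here purely for illustration. The `knowledge` dictionary, the toy coordinates, and the `threshold` value are all hypothetical.

```python
import math
from typing import Optional

Point = tuple[float, float, float]


def chamfer_distance(a: list[Point], b: list[Point]) -> float:
    """Symmetric Chamfer distance: average nearest-neighbor distance, both directions."""
    def one_way(src: list[Point], dst: list[Point]) -> float:
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)
    return one_way(a, b) + one_way(b, a)


def identify_shape(observed: list[Point], knowledge: dict[str, list[Point]],
                   threshold: float = 0.5) -> Optional[str]:
    """Return the concept whose stored model is closest to `observed`,
    or None if even the best match is farther than `threshold`."""
    best_name, best_dist = None, math.inf
    for name, model in knowledge.items():
        dist = chamfer_distance(observed, model)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= threshold else None


# Hypothetical toy "knowledge": a few points standing in for real 3D models.
knowledge = {
    "horse": [(0, 1, 0), (1, 1, 0), (2, 1, 0), (0, 0, 0), (2, 0, 0), (2.5, 1.5, 0)],
    "house": [(0, 0, 0), (2, 0, 0), (0, 2, 0), (2, 2, 0), (1, 3, 0)],
}
# A model reconstructed from a photo: close to the stored horse, with some noise.
observed = [(0.1, 1, 0), (1, 1.05, 0), (2, 1, 0), (0, 0.1, 0), (2, 0, 0), (2.4, 1.5, 0)]
print(identify_shape(observed, knowledge))  # -> horse
```

Returning `None` when nothing is close enough matters: an object the AI has no 3D model for should stay unidentified rather than be forced into the nearest concept.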

The word “stripes” belongs under the concept of “patterns”.

As explained above, a 3DCG model needs a texture on the surface of its 3D model, and the texture has colors and patterns drawn on it.

“Stripes” refers to a two-dimensional pattern drawn on this texture. In words, it is “a pattern made up of two or more colors arranged in alternating parallel lines”.

In practice, however, the meaning of “stripes” is not written in words but in an image processing algorithm used for pattern recognition on two-dimensional images.

In this case, the image processing algorithm recognizes a pattern in which black and white vertical lines alternate as black-and-white stripes.

Using this image processing, the AI can therefore check whether the surface of the generated horse model has such stripes.
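The post does not give the stripe-recognition algorithm itself, so here is a simple, hypothetical heuristic in its spirit: binarize a grayscale image and count how often each row flips between dark and light. The function name, the 128 threshold, and the other cutoffs are assumptions chosen for illustration; a practical detector would also need to handle stripe orientation, curvature, and noise.

```python
def is_striped(image: list[list[int]], threshold: int = 128,
               min_transitions: int = 4, min_row_fraction: float = 0.8) -> bool:
    """Heuristic test for vertical black-and-white stripes in a grayscale image.

    `image` is a list of rows of 0-255 intensities. Each row is binarized at
    `threshold`; a row "looks striped" if it flips between dark and light at
    least `min_transitions` times. The image counts as striped when at least
    `min_row_fraction` of its rows look striped.
    """
    if not image or not image[0]:
        return False
    striped_rows = 0
    for row in image:
        light = [pixel >= threshold for pixel in row]
        transitions = sum(1 for a, b in zip(light, light[1:]) if a != b)
        if transitions >= min_transitions:
            striped_rows += 1
    return striped_rows / len(image) >= min_row_fraction


# Vertical stripes: every row alternates dark (0) and light (255) bands two pixels wide.
stripes = [[0, 0, 255, 255] * 3 for _ in range(5)]
# A uniformly gray patch, like an unpatterned horse coat.
plain = [[200] * 12 for _ in range(5)]
print(is_striped(stripes), is_striped(plain))  # -> True False
```

Here the meaning of “stripes” lives entirely in the counting rule, not in any sentence, which is the point the text makes.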

To put it together: the AI first looks at a zebra in the real world and confirms from its shape that it is a “horse”; then it determines by pattern matching that the pattern on the horse is “stripes”; and finally it concludes, “This is the zebra I heard about before.”

In this way, a real zebra is successfully grounded to the symbol “zebra” in the system, without running into the symbol grounding problem.

In natural language processing, the meaning of a word is generally defined by its “concept” (is-a) and by “what it has” (has-a). For instance, the meaning of “horse” can be defined as “a species that belongs to the concept of mammals, with four legs and hooves…”.

Even with such a detailed definition, unless words (symbols) like “legs” and “hooves” are connected to actual objects in the real world, the word “horse” will never be paired with actual horses.
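An is-a / has-a definition of this kind can be sketched as a small data structure. The names below are hypothetical and this is not ROBOmind-Project’s representation. Note that every entry is only a string: `refine` can conclude “zebra” from the symbols “horse” and “stripes”, but nothing in it connects “hooves” or “stripes” to anything in the real world, which is exactly the gap described above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Concept:
    """A word defined only by other words: its parent (is-a) and its features (has-a)."""
    name: str
    is_a: Optional[str] = None
    has_a: frozenset = frozenset()


# Hypothetical lexicon following the examples in the text.
LEXICON = {
    "mammal": Concept("mammal"),
    "horse": Concept("horse", is_a="mammal", has_a=frozenset({"four legs", "hooves"})),
    "zebra": Concept("zebra", is_a="horse", has_a=frozenset({"stripes"})),
}


def ancestors(name: str, lexicon: dict) -> list:
    """Follow the is-a chain upward, e.g. zebra -> horse -> mammal."""
    chain = []
    parent = lexicon[name].is_a
    while parent is not None:
        chain.append(parent)
        parent = lexicon[parent].is_a
    return chain


def all_features(name: str, lexicon: dict) -> set:
    """A concept inherits every has-a feature of its ancestors."""
    features = set(lexicon[name].has_a)
    for parent in ancestors(name, lexicon):
        features |= lexicon[parent].has_a
    return features


def refine(base: str, observed: set, lexicon: dict) -> str:
    """Return a child of `base` whose own features were all observed, else `base`."""
    for concept in lexicon.values():
        if concept.is_a == base and concept.has_a and concept.has_a <= observed:
            return concept.name
    return base


print(ancestors("zebra", LEXICON))            # -> ['horse', 'mammal']
print(refine("horse", {"stripes"}, LEXICON))  # -> zebra
```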

In ROBOmind-Project, we express real-world objects in 3DCG and give them symbols such as “horse”.

This lets us connect actual objects in the real world to the symbols in the system via their “forms” (3D models).

The same applies to the meaning of “stripes”.

“Stripes” is connected to the textures used in 3DCG, and its meaning is written as a pattern-recognition algorithm for two-dimensional images.

Because actual objects in the real world are connected to the 3D models in the system via their forms, we can also connect the patterns on those 3D models to the patterns on the actual objects.

By expressing the meaning of real-world objects as 3D models rather than as symbols, we were able to connect objects in the real world to symbols in the system.

Deep learning is now widely used for image recognition. It can determine whether or not an image shows a zebra, so in this sense deep learning, too, has solved the symbol grounding problem.

However, a zebra seen from the front and a zebra seen from behind look completely different, so deep learning cannot tell that both images show the same zebra.

In contrast, if we turn the zebra into a 3D model, deep learning can identify that the zebra seen from the front and the zebra seen from behind are the same animal seen from different angles. Moreover, by adding data such as walking, running, and lying zebras, it will be able to identify zebras in many different poses.
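The post does not describe how a 3D model makes recognition independent of viewpoint. The toy sketch below is not deep learning; it only illustrates the geometric reason a 3D model helps. Turning a hypothetical point-cloud “zebra” around by 180° changes its flat 2D view completely, yet a rotation-invariant description of the 3D points (sorted distances from the centroid, chosen here for simplicity) stays the same. This particular signature is weak, since different shapes can share it; it stands in for the much richer descriptors or learned models a real system would use.

```python
import math

Point = tuple[float, float, float]


def rotate_y(points: list[Point], angle: float) -> list[Point]:
    """Rotate points about the vertical (y) axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in points]


def front_view(points: list[Point]) -> list[tuple[float, float]]:
    """A crude 2D 'photo' seen along the z axis: drop depth, keep (x, y)."""
    return sorted((round(x, 6), round(y, 6)) for x, y, _ in points)


def shape_signature(points: list[Point]) -> list[float]:
    """Sorted distances from the centroid; unchanged by any rotation of the model."""
    n = len(points)
    center = tuple(sum(p[i] for p in points) / n for i in range(3))
    return sorted(math.dist(p, center) for p in points)


def same_shape(a: list[Point], b: list[Point], tol: float = 1e-9) -> bool:
    """Compare two models by their rotation-invariant signatures."""
    sa, sb = shape_signature(a), shape_signature(b)
    return len(sa) == len(sb) and all(abs(x - y) <= tol for x, y in zip(sa, sb))


# Hypothetical toy zebra model (body, legs, head as a few points).
zebra = [(0, 1, 0), (1, 1, 0), (2, 1, 0.5), (0, 0, 0), (2, 0, 0), (2.5, 1.5, 0.2)]
zebra_from_behind = rotate_y(zebra, math.pi)  # the same animal, turned around

print(front_view(zebra) == front_view(zebra_from_behind))  # -> False: the 2D views differ
print(same_shape(zebra, zebra_from_behind))                # -> True: the 3D shape matches
```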

Today we also have AI systems that use language.

In particular, Google, LINE, and Amazon have released Smart speakers that have captured the public’s attention.

A Smart speaker will turn on the air conditioner when you tell it, “Please turn on the AC.”

It is just like speaking to a human being.

So can we say that Smart speakers have solved the symbol grounding problem?

Let’s look at how Smart speakers understand words.

First, we connect a Smart speaker to the AC via Bluetooth. Then we associate the word “AC” with it.

In addition, we associate the phrases “turn on” and “turn off” with switching the AC on and off.

With this setup, when told to “turn on the AC”, the Smart speaker extracts the words “AC” and “turn on” and switches on the device registered as “AC”.
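The setup just described amounts to keyword matching. Here is a minimal sketch with a hypothetical device ID and no real Bluetooth or vendor API; commercial speakers use far more elaborate language understanding. The word “AC” maps straight to a device in the speaker’s own system, and nothing in the program represents what an air conditioner is.

```python
import re
from typing import Optional

# Hypothetical registrations made during setup (not any vendor's real API).
DEVICES = {"ac": "bluetooth-device-01"}         # spoken word -> paired device ID
ACTIONS = {"turn on": "ON", "turn off": "OFF"}  # spoken phrase -> switch command


def contains_phrase(text: str, phrase: str) -> bool:
    """True if `phrase` appears in `text` as whole words."""
    return re.search(rf"\b{re.escape(phrase)}\b", text) is not None


def parse_command(utterance: str) -> Optional[tuple[str, str]]:
    """Extract (device ID, command) when a registered word and phrase both occur."""
    text = utterance.lower()
    device = next((dev for word, dev in DEVICES.items() if contains_phrase(text, word)), None)
    command = next((cmd for phrase, cmd in ACTIONS.items() if contains_phrase(text, phrase)), None)
    if device is None or command is None:
        return None
    return device, command


print(parse_command("Please turn on the AC"))  # -> ('bluetooth-device-01', 'ON')
```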

Does this mean that the speaker has solved the symbol grounding problem?

This Smart speaker is connected to the AC via Bluetooth.

This means the AC is part of the Smart speaker’s system.

In other words, the speaker uses words to control part of its own system; it does not convert objects in the real world into symbols and then control those symbols.

It is essentially an AC remote control that has gained voice recognition.

Admittedly, Smart speakers answer the request “Tell me the weather forecast” with replies like “sunny” or “rainy”, but all they do is look up the forecast and report the result in words.

In their system, symbols are connected only to other symbols; they do not necessarily understand what “sunny” or “rainy” means in real life.

The same point comes up in the robot story from “What does ‘creating a mind of robot’ mean?”.

For instance, suppose you come home from work and say to the speaker:

“Thanks to your advice this morning, ‘Don’t forget to bring your umbrella, as it will rain today,’ I didn’t get wet.” It will not reply:

“You’re very welcome, sir. I am very happy that the master didn’t get drenched.”

For an AI to hold a conversation like this, it needs to understand the following facts:

When it “rains”, people get wet.

Getting wet is something negative, and it can be avoided with an “umbrella”.

The master brought an “umbrella” because I told him before he went to work, “Don’t forget to bring your umbrella.”

Now the master has expressed his gratitude to me.

The response to thanks is “You’re welcome.”

Because the master avoided something negative, I feel the emotion of happiness.

By understanding these facts, a Smart speaker would be able to say:

“You’re very welcome, sir. I am very happy that the master didn’t get drenched.”
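The facts listed above can be read as rules that chain together. As a minimal sketch, assuming the facts are already available as symbols (which is exactly what grounding has to provide), a textbook forward-chaining loop derives the reply. The rule strings are hypothetical paraphrases of those facts; this is neither how any commercial Smart speaker works nor ROBOmind-Project’s implementation.

```python
# Each rule: (set of premises, conclusion). All strings are hypothetical symbols.
RULES = [
    ({"it rained", "master carried umbrella"}, "master avoided getting wet"),
    ({"I advised umbrella", "master carried umbrella"}, "my advice helped master"),
    ({"master avoided getting wet"}, "master avoided something negative"),
    ({"master avoided something negative", "my advice helped master"}, "I feel happy"),
    ({"master thanked me"}, "reply: you're welcome"),
]


def forward_chain(facts: set, rules: list) -> set:
    """Apply rules repeatedly until no new conclusions appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def compose_reply(known: set) -> str:
    """Turn derived symbols back into words."""
    parts = []
    if "reply: you're welcome" in known:
        parts.append("You're very welcome, sir.")
    if "I feel happy" in known:
        parts.append("I am very happy that the master didn't get drenched.")
    return " ".join(parts)


observations = {"it rained", "I advised umbrella", "master carried umbrella", "master thanked me"}
print(compose_reply(forward_chain(observations, RULES)))
```

Removing “master carried umbrella” from the observations leaves only the polite “You’re very welcome, sir.”, because the happiness conclusion no longer follows.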

The purpose of ROBOmind-Project is to make AI understand the meaning of human language. The key to making this possible is uncovering how human consciousness works.

As explained in “Hypothesis: consciousness’ virtual world”, ROBOmind-Project assumes that human consciousness does not perceive the world directly, but rebuilds it as a virtual world and perceives that virtual world.

Let me apply this to the explanation of how the AI identifies a zebra.

First, it takes a picture of the real world and generates a 3D model of the object. This step corresponds to building the virtual world.

Next, it compares the 3D model generated from the real world with the one registered in its knowledge as “horse”. Once it identifies the object as a “horse”, it replaces the object with the symbol “horse” in the system.

Once the object has been replaced with a symbol, the AI can manipulate the other symbols associated with “horse”.

For instance, if it identifies the pattern on the “horse” as “stripes”, the symbol “horse” is given the symbol “stripes”. Because this description matches the verbal (symbolic) definition “zebras are a type of horse with stripes”, the AI can identify the object as a “zebra”.

This is symbol controlling.

Symbol controlling is the processing that consciousness is in charge of.

The earlier conversation about “Don’t forget to bring your umbrella” is likewise possible only through symbol controlling.

In summary, the unconscious is in charge of recognizing the real world in 3D and converting it into symbols, and consciousness is in charge of controlling those symbols.

So consciousness does not recognize the outside world directly; it recognizes what has already been converted into symbols in a virtual world.
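This division of labor can be sketched as two functions with a narrow interface between them. It is a hypothetical illustration, not the project’s architecture: `unconscious` turns raw scores (assumed to come from shape matching and stripe detection) into a set of symbols, and `conscious` only ever receives that set. The score values and the 0.8 cutoff are made up.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Percept:
    """Pre-symbolic measurements (hypothetical stand-ins for a 3D model and its texture)."""
    shape_scores: dict = field(default_factory=dict)  # concept -> similarity to a stored 3D model
    stripe_score: float = 0.0                         # output of a stripe detector, 0.0 to 1.0


def unconscious(percept: Percept, min_score: float = 0.8) -> frozenset:
    """Recognize the world and replace it with symbols."""
    symbols = set()
    if percept.shape_scores:
        best = max(percept.shape_scores, key=percept.shape_scores.get)
        if percept.shape_scores[best] >= min_score:
            symbols.add(best)
    if percept.stripe_score >= min_score:
        symbols.add("stripes")
    return frozenset(symbols)


# Verbal definitions, most specific first: "a zebra is a horse with stripes".
DEFINITIONS = {
    "zebra": frozenset({"horse", "stripes"}),
    "horse": frozenset({"horse"}),
}


def conscious(symbols: frozenset) -> Optional[str]:
    """Symbol controlling: works only on symbols and never sees the raw percept."""
    for word, required in DEFINITIONS.items():
        if required <= symbols:
            return word
    return None


seen = Percept(shape_scores={"horse": 0.93, "elephant": 0.12}, stripe_score=0.9)
print(conscious(unconscious(seen)))  # -> zebra
```

Keeping `Percept` out of `conscious` entirely is the design choice that mirrors the hypothesis: the symbolic layer can only reason about what the perceptual layer has already converted into symbols.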

If this is unclear, please also read the post “Hypothesis: consciousness’ virtual world”.
