How Can We Reveal Our Mind From Observing Our Brain

Will we know where our mind resides once all the neural circuits of the brain have been revealed?

Have you ever thought there was a little brown sandal in your entryway, only to find that it was in fact a huge cockroach?

(Never!)

What is being processed in our brain when we look at something and identify it as “this is such-and-such”?

Among the nerve cells of the brain, there are cells that respond to the angle of the lines they are shown.

Some cells respond only to horizontal lines, some only to vertical lines, and some only to lines at 45°. Each cell has a particular angle that it detects.

It has also been revealed that some cells respond not to simple features like angles but only to the faces of certain animals.
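To make the idea of angle-specific cells concrete, here is a minimal sketch in Python. It is only a toy model under my own assumptions: each “cell” is a hypothetical 3×3 filter (`VERTICAL_CELL`, `HORIZONTAL_CELL`) and `response` is an illustrative name, not a description of how real neurons are measured.

```python
# Toy model (assumption): each "cell" is a 3x3 filter tuned to one line orientation.
VERTICAL_CELL = [[-1, 2, -1],
                 [-1, 2, -1],
                 [-1, 2, -1]]
HORIZONTAL_CELL = [[-1, -1, -1],
                   [ 2,  2,  2],
                   [-1, -1, -1]]

def response(cell, patch):
    """Weighted sum of the patch, clipped at zero (the cell either fires or stays silent)."""
    total = sum(cell[r][c] * patch[r][c] for r in range(3) for c in range(3))
    return max(0, total)

# Two tiny 3x3 "images": 1 is a dark pixel on the line, 0 is background.
vertical_line = [[0, 1, 0],
                 [0, 1, 0],
                 [0, 1, 0]]
horizontal_line = [[0, 0, 0],
                   [1, 1, 1],
                   [0, 0, 0]]

print(response(VERTICAL_CELL, vertical_line))      # 6: the vertical cell fires
print(response(VERTICAL_CELL, horizontal_line))    # 0: it ignores the horizontal line
print(response(HORIZONTAL_CELL, horizontal_line))  # 6: the horizontal cell fires
```

Each filter reacts strongly to its own orientation and not at all to the other one, which is the behavior described above.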

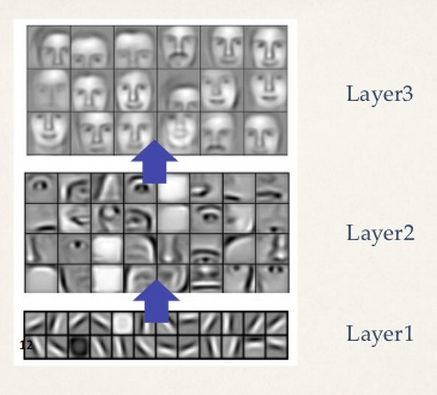

From this, it can be said that when we look at something, we identify it in steps, from details such as the angles of lines up to bigger parts. This is the same approach that deep learning uses for image recognition.

As explained in “The Impossible Limit of Deep Learning”, deep learning is built from multi-layered neural networks: the first layers identify details such as the tilt of lines, circles and squares, later layers identify shapes such as eyes or ears, and the final layers identify the whole object, such as a face.

From this, it can be said that deep learning processes images in almost the same way as our brain does.

Lately, AI chips specialized for deep learning have been coming to market and attracting attention.

So let’s consider how much we can reveal about consciousness or the mind by comparing these AI chips with the human brain and by revealing how the brain’s nerve cells work.

First, let me explain general computers.

Computers fall into basically two types according to their structure.

The first is the Von Neumann type, built around a CPU, which is what an ordinary laptop uses.

It is characterized by being driven by a program.

A CPU has various arithmetic circuits, such as addition and multiplication circuits, and it executes calculations in order according to its program.

For instance, to calculate “(1+2)×4”, the CPU first executes “1+2” using the addition circuit and stores the result “3”. Then it executes “3×4” using the multiplication circuit.
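The step-by-step execution described above can be sketched as a tiny interpreter in Python. This is a simplified illustration, not a real CPU: the instruction format, the register names `r0` and `r1`, and the function `run` are all assumptions made for this example.

```python
def run(program):
    """Execute instructions one at a time, the way a CPU follows its program."""
    registers = {}
    for op, dest, a, b in program:
        # An operand is either a register name (str) or a literal number.
        x = registers[a] if isinstance(a, str) else a
        y = registers[b] if isinstance(b, str) else b
        if op == "ADD":
            registers[dest] = x + y   # use the addition circuit
        elif op == "MUL":
            registers[dest] = x * y   # use the multiplication circuit
        else:
            raise ValueError(f"unknown instruction: {op}")
    return registers

# The program for (1+2)×4, written as two sequential steps.
program = [
    ("ADD", "r0", 1, 2),     # step 1: r0 = 1 + 2, the intermediate result is stored
    ("MUL", "r1", "r0", 4),  # step 2: r1 = r0 * 4
]
result = run(program)
print(result["r1"])  # 12
```

The hardware (`run`) stays the same. Only the list called `program` decides what is calculated.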

The other type is the non-Von Neumann computer.

For example, a GPU, which is dedicated to image processing, belongs to this category.

Encoding and decoding images requires repeating additions and multiplications hundreds or thousands of times.

A CPU could do these calculations under the control of a program, but repeating similar calculations many times on a CPU is extremely inefficient.

In that case, it is more efficient to build a dedicated arithmetic circuit.

That is to say, a circuit in which the necessary addition circuits and multiplication circuits are connected in series.

With such a circuit, a calculation that would otherwise take hundreds or thousands of steps can be completed in a single pass, which makes processing extremely fast.
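As a rough sketch of what “wiring circuits together” means, the Python below hard-wires an adder into a multiplier. The names `adder`, `multiplier` and `wired_circuit` and the sample data are hypothetical. Note also that Python itself still runs on an ordinary CPU, so this only illustrates the structure, not the speed.

```python
def adder(a, b):
    return a + b

def multiplier(a, b):
    return a * b

def wired_circuit(a, b, c):
    """Fixed wiring: the adder's output feeds straight into the multiplier.

    There is no program to read. The calculation (a + b) * c is the circuit itself.
    """
    return multiplier(adder(a, b), c)

# The same fixed circuit is applied to every input, as in image processing.
inputs = [(1, 2, 4), (3, 5, 2), (0, 7, 10)]
outputs = [wired_circuit(a, b, c) for a, b, c in inputs]
print(outputs)  # [12, 16, 70]
```

Unlike the program-driven approach, this circuit can do only one thing, but it never has to fetch and interpret instructions to do it.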

Deep learning also involves repeating simple calculations, so it was decided that a dedicated non-Von Neumann chip would be more efficient than a CPU. This is how the AI chip came about.

Currently, the biggest company in the AI chip market is NVIDIA, which is well known as a GPU manufacturer.

Because a non-Von Neumann computer has the content of its calculation wired in as arithmetic circuits, it is also called wired logic.

It can be said that this is the same as the neural circuits inside our brain.

On this view, we will be able to create a computer that has consciousness or a mind once we reveal everything that our brain’s neural circuits process and create a computer chip with the same functions.

Attempts of this kind are already under way around the world. In Japan, Dwango is the main driving force behind the Whole Brain Architecture Initiative (WBAI), a project to realize all the functions of our brain on a computer.

Now here comes the main issue.

A non-Von Neumann AI chip that uses deep learning is capable of looking at something and identifying what it is.

As we saw in “What Is consciousness In The First Place?”, when a hungry frog sees a fly in front of it, it extends its tongue, catches the fly and eats it.

This can be realized by connecting the recognition process, “identify the fly”, to the action process, “extend the tongue and catch the fly”.

The logic is simple: connect the recognition of “the fly” to the action “catch the fly”.
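The frog's logic is simple enough to write down directly. In the sketch below, `recognize` is only a stand-in for a recognition network, and the input string and function names are assumptions for illustration.

```python
def recognize(visual_input):
    """Stand-in for the recognition process: return a label for what is seen."""
    return "fly" if visual_input == "small dark moving dot" else "unknown"

def frog_reaction(visual_input, hungry):
    """Recognition is wired directly to action. There is nothing in between."""
    label = recognize(visual_input)
    if label == "fly" and hungry:
        return "extend tongue"
    return "do nothing"

print(frog_reaction("small dark moving dot", hungry=True))   # extend tongue
print(frog_reaction("small dark moving dot", hungry=False))  # do nothing
print(frog_reaction("large green leaf", hungry=True))        # do nothing
```

There are only two possible outputs, and the label “fly” is consumed immediately. Nothing is kept around to think about.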

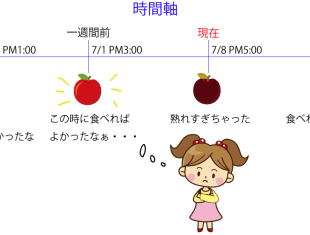

Humans, on the other hand, can identify “an apple” and then ponder “should I eat the apple now?” or “should I save it for my 3 o’clock snack?”. A frog’s brain is not capable of this.

Why is that?

It is because, after identifying something as a “fly”, the frog has only one simple choice to make: catch it and eat it, or do nothing.

When we humans think “which option should I choose?”, we need to be able to freely manipulate the identified “apple” in our head.

This manipulation inside the head is symbol processing.

Deep learning can handle the processing from looking at an object to identifying what it is.

As long as the object can be identified, it can be converted into a symbol.

Once it has been converted into a symbol, we can think “which option should I choose?” in our head.

So what does it take to think “which option should I choose?” in one’s head?

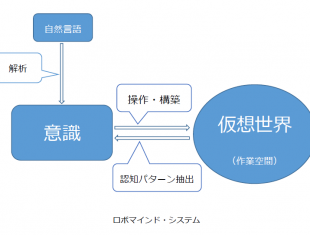

As I explained in “ROBOmind-Project System Overview”, it requires building a virtual world and manipulating symbols in it.

A virtual world is an artificially built replica of the reality that we see and feel.

Thinking “which option should I choose?” means simulating events in this virtual world.
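Here is a minimal sketch of deciding by simulation, using the apple example. Everything in it is an assumption made for illustration: the hunger values, the `discomfort` score and the function names are hypothetical and are not taken from the ROBOmind-Project itself.

```python
def simulate(world, action):
    """Imagine `action` on a copy of the virtual world. The original is left untouched."""
    imagined = dict(world)
    if action == "eat now":
        imagined["hunger_now"] = 0
    elif action == "eat at 3 o'clock":
        imagined["hunger_at_3"] = 0
    return imagined

def discomfort(world):
    """Hypothetical score: total hunger across the day (lower is better)."""
    return world["hunger_now"] + world["hunger_at_3"]

def decide(world, actions):
    """Pick the action whose imagined outcome has the lowest discomfort."""
    return min(actions, key=lambda action: discomfort(simulate(world, action)))

# The recognized object has become a symbol ("apple") inside the virtual world.
virtual_world = {"object": "apple", "hunger_now": 2, "hunger_at_3": 5}
choice = decide(virtual_world, ["eat now", "eat at 3 o'clock"])
print(choice)  # eat at 3 o'clock
```

The point is that each option is tried out on a copy of the world before anything is done in reality, which is exactly what the frog's direct wiring cannot do.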

Building a virtual world involves far more complex processing than identifying a fly and determining whether or not to catch it.

A non-Von Neumann computer is incapable of executing such complex processing.

It requires a Von Neumann computer that runs a program.

From this point forward, I am going to explain my thesis: most probably, part of the human brain has a Von Neumann architecture.

In other words, the human brain uses a non-Von Neumann architecture when it sees things and identifies them, and a Von Neumann architecture when it converts them into symbols and processes those symbols.

One characteristic of a non-Von Neumann computer is its speed.

Within a second, a frog identifies the fly and catches it with its tongue.

However, a non-Von Neumann computer cannot handle complex processing.

A Von Neumann computer is capable of more complex processing such as simulation. It can simulate future actions or recall past events.

As I explained in “Time does not exist in reality. Time is a fantasy”, we store current happenings in episodic memory, and only when we recall them later can we perceive a flow of “time” that runs from the past to the present.

Animals can only recognize what is in front of their eyes and act directly on those objects. Hence they can perceive only “the present” and cannot understand concepts of time such as past and future.

Understanding “concepts” such as time requires a brain with a Von Neumann architecture.

Then comes “language”.

The biggest difference between animals and humans is language.

“Language” is nothing but symbol processing.

Being able to speak a “language” therefore requires a brain with a Von Neumann architecture.

The difference between humans and other animals, aside from basic differences in parts of the brain structure, lies in whether the brain has a Von Neumann architecture.

The biggest characteristic of a Von Neumann computer is its ability to execute programs.

By swapping the program, it can take on any task.

However, if you just observe a Von Neumann computer’s processing from the outside, it is almost impossible to understand which program is being executed.

Even if you traced every signal inside the CPU, you would not know which program the CPU is executing: Excel, Word, or Super Mario Bros.

A non-Von Neumann computer, in contrast, has its arithmetic circuits laid out to match the content of the processing, so if you track the flow of signals, you can tell what is being processed.

Thanks to the development of brain science since the end of the 20th century, the mechanisms by which our brain identifies objects have been revealed to a considerable extent.

However, most of these functions are not unique to human beings but are shared with many species.

Little is known about language, consciousness and the mechanism of the mind, which only humans possess.

The reason may be that these functions, which only humans possess, are realized in a part of the brain with a Von Neumann architecture.

Put another way, humans might have acquired a brain structure with a Von Neumann architecture at some point during evolution.

That might be why only humans are capable of talking.

Moreover, what a Von Neumann architecture is processing cannot be understood by observing it from outside.

We cannot know how subjectivity arises in our brain by observing our brain (the Hard Problem of Consciousness).

To realize AI with consciousness, it is not enough to reveal the structure of the brain.

To realize AI with consciousness, we need to form a hypothesis about the model of the mind and test it by running it as a program.

The Impossible Limit of Deep Learning

What Is consciousness In The First Place?

ROBOmind-Project System Overview

Time does not exist in reality. Time is a fantasy.

Now that we solved the hard problem of consciousness… What’s next?